Published: 2021-08-19

NeuralHash, Semantics, Collisions and You (or When is a Cat a Dog?)

When is a cat a dog? Where does one moment stop and another start? These questions have confounded computers since the dawn of time, and today is no different.

Despite what anyone may try to sell you on.

A version of NeuralHash has been extract and reverse engineered. Apple claim that this version is not the final version they will use in their system, but I’m going to assume for the sake of argument that this version is at least related to the version we will eventually see.

There are also fundamental limitations of perceptual hashing that will always be generally applicable and many relate to the observations in this article.

Spot the Difference

Teaching computers to play spot the difference and answering questions about images is a large academic area which has generated a lot of neat datasets. One of my favourites essentially presents almost identical images to a computer with an associated question.

It turns out that NeuralHash performs incredibly interestingly on such a dataset, with large static background and very few - but semantically meaningful - changes.

(Y)in and (Yang): Balancing and Answering Binary Visual Questions: Dataset Peng Zhang and Yash Goyal and Douglas Summers-Stay and Dhruv Batra and Devi Parkh

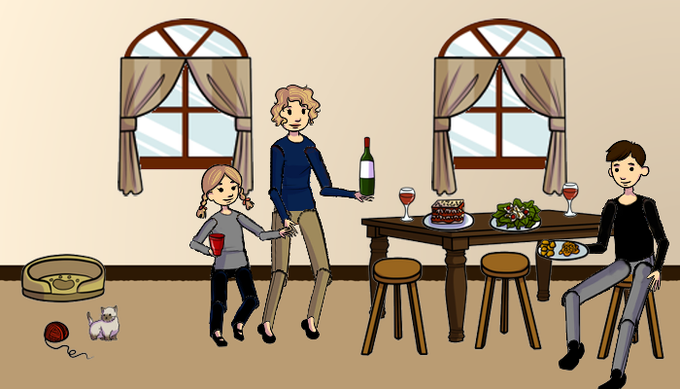

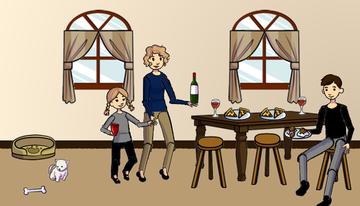

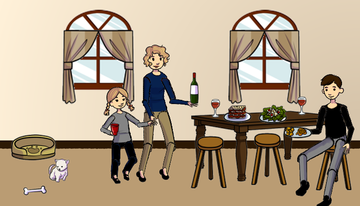

For example, the below images of a cat, and a dog share the same NeuralHash:

It gets worse when we examine the entire image these pictures came from. They all show the same family eating a meal, however in some pictures the family are eating pizza, and in other lasagne. In addition, in one of the picture the dog is replaced with a cat.

All 3 of these images share the same NeuralHash:

To demonstrate that it is not just cartoons and comic strips that have a semantic issue consider the following three image from a baseball game, all with the same NeuralHash:

They are strikingly similar images taken milliseconds apart, but are also critically different. Are they semantically the same image? I can think of arguments for-and-against but NeuralHash has a clear vote here.

Given a perceptual hashing algorithm generating a collision is, by-definition, trivial. The collisions above are trivial, but demonstrate an important concept: visually similar images are not necessarily semantically similar images.

A Note on “Preimage Resistance”

I really don’t think it makes sense to talk about first and second preimage resistance since those properties sonly apply to cryptographic hash functions, and NeuralHash is not a cryptographic hash function.

I keep having to say this…

But since so many people have talked about this work in those terms let me spell it out:

- preimage resistance: given y, it is difficult to find an x such that h(x) = y.

- second-preimage resistance: given x, it is difficult to find a second preimage z ≠ x such that h(x) = h(z).

Both of these have been fundamentally broken for NeuralHash. There is a now a tool by Anish Athalye which can trivially, when given a target hash y, can find an x such that h(x) = y.

https://github.com/anishathalye/neural-hash-collider

Given such a tool constructing second pre-images is trivial, but even without it is important to note that because of the way NeuralHash works it is very easy to construct images such that h(x) = h(y).

Finding and Generating Collisions

Inspecting several thousand images yesterday I came across many very close hashes. When looking closer it is obvious why the algorithm might consider them closer, for example, take the following pictures of some wind surfers and a herd of giraffes:

At first glance they look very different, but take a closer look. Both have a striking line along the horizon and a composition of many small subjects dotted about the image. As a result these hashes differ by only a few bits.

As such it is very easy to make one match the other, or to make both collide to a 3rd hash:

That being said, it is also very easy to make unrelated images collide too…

Further, there have already been natural “random” collisions found in another well known dataset (ImageNet contains naturally occurring NeuralHash collisions) by Brad Dwyer, and I expect more will follow in the coming days.

That is not to say that the discovery of such collisions undermines the numbers that Apple disclosed, we don’t really have enough information to make any kind of judgement at all about those numbers, but the number of collisions so far is within the magnitude of Apple’s high level estimates.

The Difference Between a Parlour Trick, a Curiosity, and an Exploit

Enough said right? Well not really, as funny as many of these collisions are they don’t outright break the Apple threat model.

First, if natural collisions were more common that Apple said they were (1 in 100M empirically or 1 in 1M assumed) there would be an argument that the proposed PSI system is unworkable. Time will be the judge there. There have only publicly been attempts to match the hashes of around 1.5 million images.

In that time we have found numerous visually-similar, semantically-dissimilar collisions and a couple of naturally random collisions and an ever grown number of purposefully generated collisions. Your reaction to these, is going to depend on how you view their importance to the overall system.

I think it is important to break down some of these categories further and try to fully understand the role they will play:

The Problem of Burst Photos

We have shown that burst photos are likely to result in identical perceptual hashes for content that would otherwise get very different cryptographic hashes. “Duplicates” like this are explicitly handled by mechanisms outside the core protocol, so it is really going to come down to how Apple handles these.

The Apple system will deduplicate photos based on an identifier, but burst shots are semantically different photos with the same subject - and an unlucky match on a burst shot could lead to multiple match events on the back end if the system isn’t implemented to defend against that.

The Problem of Cartoons

We have also shown that cartoon and comic book style static background with small semantic changes are also likely to result in identical hashes. This has interesting implications for jurisdictions which can consider cartoons and other constructed image as CSAM, and if the databases do contain such material then there is an implication that matching against it could be much more common that the general average.

The Problem of Adversarial Matches

Finally, we have the problem that most people talk about, constructed adversarial images. In 24 hours we have gone from not understanding NeuralHash to multiple low-noise constructed collisions which match low on automated detection measures.

There is an argument to be made that such images will be easily filtered on the backend, either by computers or humans, but I’m not sure these arguments holds much weight.

First, it is not the images that are encrypted, it is their “visual derivatives” - we are not sure exactly what that means, but in any case it will likely result in the modification of the image prior to encryption, transit, decryption and inspection. Will such a derivative be as easily distinguished in the backend?

Secondly we know that any such second process will likely involve a secondary perceptual hash and human inspection. Given the ease of colliding one kind of perceptual hash it is likely easy to collide a derivative with another (even if “secret”) and even easier to collide images in a way that might trick a human.

Third, even if such images are detectable or filterable it still creates two additional problems for Apple. The first is the presence of malicious or co-opted clients (explicitly ignored in the threat model) in the form of wasted human effort or resource exhaustion. The second is that all this happens once privacy as already been violated.

See: A Closer Look At Fuzzy Threshold PSI

If there is one concept I want you to take away from these notes it is that of semantic similarity.

This is explicitly a system that is intended to semantically distinguish images. Just because images look the same doesn’t mean they are the same. And it doesn’t mean that all humans would judge them as being the same.

Likewise, just because someone has labelled a system as only preventing a certain kind of contraband doesn’t mean the system can only be used to prevent that kind of contraband.