Published: 2022-03-21

A brief introduction to insecurity buttons

Recently I have had a number of conversations with people regarding new features in Cwtch. One common thread throughout nearly all of them was the need to explain the concept of an “insecurity”-button.

Specifically, I needed to explain why a suggested feature idea would necessarily result in a ‘bad’ “insecurity”-button.

Simply put, an “insecurity”-button, in the context of a user-facing application, is a button that, when pressed, makes the user less secure than they previously were.

Probably the best-known example of an “insecurity”-button is the TLS warning pane present in all modern web browsers. These panes usually feature a button (or series of buttons) that allows a user to override a warning and browse the website despite a critical issue with the TLS connection. The ability of users to navigate these warnings safely is still an area of active research.

Reeder, R.W., Felt, A.P., Consolvo, S., Malkin, N., Thompson, C. and Egelman, S., 2018, April. An experience sampling study of user reactions to browser warnings in the field. In Proceedings of the 2018 CHI conference on human factors in computing systems (pp. 1-13).

Why have “insecurity”-buttons at all?

I want to state up front that not all “insecurity”-buttons are bad. In fact, I would argue the opposite - that they are essential for building secure, consentful software.

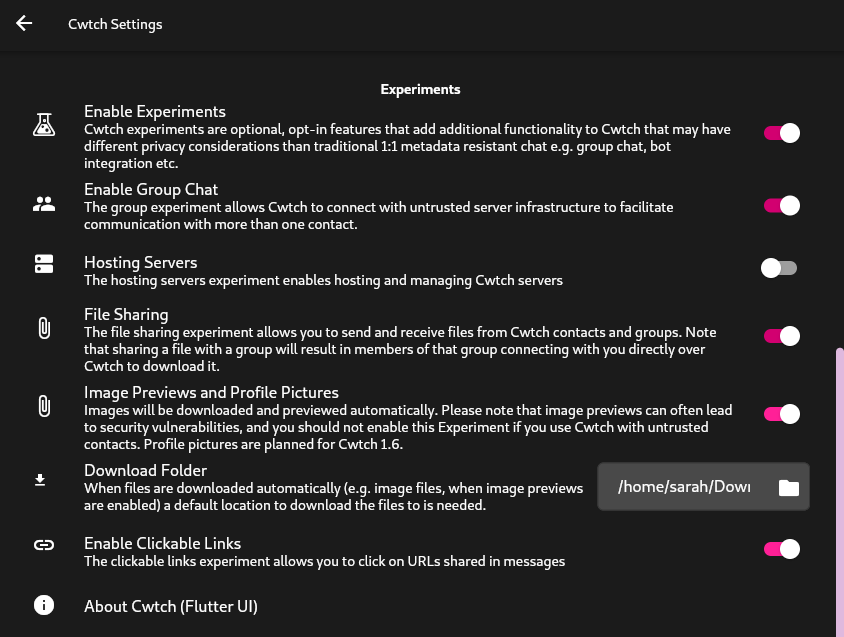

Cwtch has several “insecurity”-buttons under the “Experiments” heading in settings. Experiments enable new functionality at the cost of some quantifiable risk.

Or to put it another way, these “insecurity”-buttons offer users a choice of what features they want to enable, while explaining the additional risks that such features inherently require.

One example is “Enable Group Messaging”, which gives access to a protocol that exhibits different metadata privacy properties than P2P conversations. Group messages require offline delivery, and offline delivery requires the involvement of an untrusted server component.

Another example is “Clickable Links”, which causes URLs in Cwtch messages to become “clickable” and (after a warning screen) allows opening those links in an external browser - which may reveal untold metadata to the server behind the URL, and to many parties in between.

As a rough guide, in Cwtch we believe that “insecurity”-buttons should only result in insecurity that:

- is well-advertised up front

- is consented to

- is reversible

- has a well-understood scope

- and ultimately, serves an important purpose

If an “insecurity”-button would fail any of those points then it is deemed not suitable for Cwtch. Everything under “Experiments” is strictly opt-in and limited in scope. Being able to turn on experiments is itself a setting that must be turned on - and doing so comes with a warning that experiments may have different privacy considerations.

Users can disable any, or all, experiments at any time.
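The two-level opt-in described above can be sketched in a few lines. This is only an illustration of the idea, not how Cwtch stores its settings: the `Settings` class, its method names, and the experiment names used below are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Settings:
    """Hypothetical settings store; an assumption, not Cwtch's data model."""

    experiments_enabled: bool = False  # master switch, off by default
    enabled: Set[str] = field(default_factory=set)  # experiments opted into

    def enable_experiments(self, acknowledged_warning: bool) -> None:
        # The master switch only flips once the warning has been accepted.
        if not acknowledged_warning:
            raise PermissionError("experiments warning was not acknowledged")
        self.experiments_enabled = True

    def enable(self, name: str) -> None:
        # Individual experiments cannot be enabled while the master switch is off.
        if not self.experiments_enabled:
            raise PermissionError("experiments are switched off")
        self.enabled.add(name)

    def disable(self, name: str) -> None:
        # Reversible: any single experiment can be turned off again.
        self.enabled.discard(name)

    def disable_all(self) -> None:
        # Reversible: the master switch clears every opt-in at once.
        self.experiments_enabled = False
        self.enabled.clear()

    def is_active(self, name: str) -> bool:
        # A feature is live only if both levels of consent are present.
        return self.experiments_enabled and name in self.enabled
```

In this sketch a feature such as a hypothetical `"clickable-links"` experiment checks `is_active` every time it runs, so turning it off (or turning off experiments entirely) takes effect immediately.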

What makes a bad “insecurity”-button?

Fundamentally, if an “insecurity”-button would violate any of the tenets above then it would be a bad idea.
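One way to picture that test is as a checklist where a single failed tenet is enough to reject a button. The sketch below is purely illustrative: the `ButtonReview` type and its field names are my own shorthand for the five tenets, not anything that exists in Cwtch.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class ButtonReview:
    """Answers to the five tenets for one proposed button (hypothetical type)."""

    advertised_up_front: bool
    consented_to: bool
    reversible: bool
    well_understood_scope: bool
    serves_important_purpose: bool


def failed_tenets(review: ButtonReview) -> List[str]:
    # Collect the name of every tenet the proposal does not satisfy,
    # in the order the tenets are declared above.
    return [f.name for f in fields(review) if not getattr(review, f.name)]


def is_acceptable(review: ButtonReview) -> bool:
    # Failing any single tenet is enough to reject the button.
    return not failed_tenets(review)
```

For example, a made-up proposal that is well advertised, consented to, and useful, but cannot be undone and has an unclear scope, would be rejected with `failed_tenets` returning `["reversible", "well_understood_scope"]`.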

To give an example: I was recently asked about a feature that would effectively create multiple classes of Cwtch Groups with very different censorship and security properties.

The big problem with the idea arose as a consequence of a choice the user would have to make at the server level, i.e. an “insecurity”-button - and more specifically, the scope and reversibility of that choice.

Unlike regular feature Experiments, which all have very obvious UI-level changes, this choice would only really impact the protocol itself, and the results would only be visible if they were explicitly made so by the UI.

The button was also irreversible: once it had been clicked for a given server, the user’s security concerning interactions with all groups hosted on that server would be forever altered.

It also had a significant impact on the security of pre-existing flows, such that those flows would have to be altered and amended in order not to compromise their own security.

Ultimately, this button would have been a bad button.